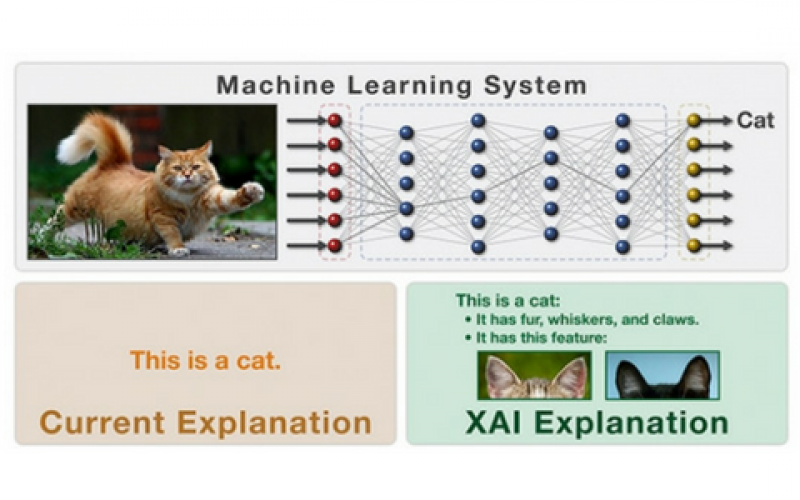

While Deep Learning is remarkable at pattern recognition, classification, and prediction, it falls short when it comes to personalization, explainability, and understanding the rationale behind its own outputs.

Kinetix’ Deep Reasoning XAI technology is built on a radically different AI algorithm design, one that overcomes these shortcomings of Deep Learning. Under the hood, its architecture is purpose-built for enterprise-grade, human-in-the-loop decision support systems.